Collab is a group of investment fund advisors whose blog posts often apply to managing more than one’s money. The post below, on risk, is one of them.

If we anticipate risk at every turn, we become paralyzed with fear. That said, if we considered it more often than we do, we could prevent a lot of heartache.

Nobody Planned This, Nobody Expected It…

The Battle of Stalingrad was the largest battle in history. With it came equally superlative stories of how people dealt with risk.

One came in late 1942, when a German tank unit sat in reserve on grasslands outside the city. When tanks were desperately needed on the front lines, something happened that surprised everyone: Almost none of them worked.

Out of 104 tanks in the unit, fewer than 20 were operable. Engineers quickly found the issue, which would defy belief if I hadn’t read it in a reputable history book. Historian William Craig writes: “During the weeks of inactivity behind the front lines, field mice had nested inside the vehicles and eaten away insulation covering the electrical systems.”

The Germans had the most sophisticated equipment in the world. Yet there they were, defeated by mice.

You can imagine their disbelief. This almost certainly never crossed their minds. What kind of tank designer thinks about mouse protection? Nobody planned this, nobody expected it.

But these things happen all the time.

Risk does not like prophets. It’s not even fond of historians. You can plan for every risk except the things that are too crazy to cross your mind. And those crazy things do the most harm, because they happen more often than you think and you have no plan for how to deal with them.

Risk in most professions is managed by studying what’s common. Maybe 10 topics and their solutions, said slightly differently, dominate business and investing books.

The problem is that historical risks you can study are dwarfed by risks you actually experience. There are a zillion treacherous risks, but most on their own are not common enough to take seriously or collect data on. Like mice destroying tanks.

Search the New York Times’ archives, and you’ll find that the word “unprecedented” has been published once for about every ten instances of the word “common.” Which is a lot. Stanford professor Scott Sagan nailed this when he said, “Things that have never happened before happen all the time.”

(…)

Nobody planned this, nobody expected it. That’s what actual risk is.

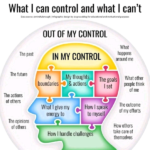

A couple of traits serve people well in these situations.

1. Avoiding single points of failure

A rule of thumb is that everything will eventually break. So if a bunch of things rely on one thing, and that thing breaks, you are counting the days to catastrophe.

Single points of failure come in many forms. You can think of them as anything with leverage: a thing that makes other things more effective. Some do a remarkable job avoiding them: Virtually every critical system on airplanes has backups, and the backups often have backups. Modern jets have four redundant electrical systems. You can fly with one engine, and technically land with none, as every jet must be capable of stopping on a runway with its brakes alone, without thrust reverse from its engines. Suspension bridges can lose many of their cables without falling.

(…)

A smart way to spot non-obvious single points of failure comes from the Motley Fool, where I used to work. An employee’s name was drawn out of a hat each month. The winner was required to take 10 days off work with no work communication, no exceptions. This was partly to counter the tendency of unused vacation days to pile up. But it was also a test against single points of failure. You only know how reliant you are on a single employee when that person leaves unexpectedly, with no communication. If only one person in the company knows the password to some critical program, or is the only contact with an important vendor, they will eventually turn into a field mouse that no one knew existed until it’s too late. Find them preemptively, in a controlled way, and you’re better off.

2. Humility when learning from past mistakes

Daniel Kahneman says:

“What you should learn when you make a mistake because you did not anticipate something is that the world is difficult to anticipate. That’s the correct lesson to learn from surprises: that the world is surprising.”

The idea is to avoid always fighting the last war. If you experience a problem and then fix that problem, you’re only safer to the extent that the same problem was likely to repeat in the future. But you’re worse off if that problem was rare while your fix gives you a false sense of confidence in your overall safety. Like banks shutting down the subprime mortgage department, simultaneously ramping up the subprime auto loan department, and thinking they learned their lesson from the financial crisis.

Learning specific lessons is great. But every painful mistake should generate a sense of, “We need more room for error.” Room for error doesn’t just protect you from risk you’ve experienced in the past; it guards against future risk you haven’t thought of. Which is perhaps the closest thing to a mouse trap that exists.